Read the opinion of António Branco, Professor at the Faculty of Sciences of the University of Lisbon and Director General of the PORTULAN CLARIN Research Infrastructure for the Science and Technology of Language, on the Albertina PT-* project.

Advances in Artificial Intelligence have been impressive, especially in their application to Language Technology. This progress rests on machine learning with so-called Large Language Models, such as GPT-3 or ChatGPT, which have been much discussed recently.

These neural networks are gigantic: GPT-3, for example, has 175 billion connections between artificial neurons. They capture linguistic regularities when trained, in massive computational processes, on colossal volumes of linguistic data, whether text or audio. In the case of GPT-3, 500 billion words were used in training.

Once trained, these models can be applied to a wide range of linguistic tasks with unprecedented levels of quality, such as translation, conversation, speech transcription and subtitling, text and speech generation, content analysis, and information extraction. When integrated into broader systems, they are transforming diagnostics and healthcare, financial and legal services, gaming and entertainment, education, creativity and culture, and more.

Due to the size of the models, these processing tasks are made available remotely, as online services, as is the case with search engines and unlike the spell checkers installed locally on our devices. Due to the size of the resources needed for training, these services are currently provided by an oligopoly of big tech companies, which can be counted on the fingers of one hand, with the capacity to access the colossal volumes of computing power and data required.
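To give a sense of scale, here is a rough back-of-the-envelope sketch of why a model of this size is served remotely rather than installed on a personal device. It assumes that each of the 175 billion connections is stored as one parameter of 2 bytes (16-bit precision) and counts the weights alone. The function name and the 2-byte figure are illustrative assumptions, not details taken from any particular deployment.

```python
def weight_memory_gb(num_params: int, bytes_per_param: int = 2) -> float:
    """Approximate memory for the model weights alone, in gigabytes (1 GB = 1e9 bytes).

    Assumption: every parameter takes `bytes_per_param` bytes (2 bytes = 16-bit precision).
    """
    return num_params * bytes_per_param / 1e9


# 175 billion connections, as cited above for GPT-3, treated as parameters.
gpt3_gb = weight_memory_gb(175_000_000_000)
print(f"GPT-3 weights at 2 bytes each: about {gpt3_gb:.0f} GB")
```

Under these assumptions the weights alone come to about 350 GB, far more than the memory of a typical laptop or phone.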

Consequently, in the digital age, the use of language—with other human beings, organizations, services, or artificial devices—will never again occur without this pervasive and profound technological intermediation, which processes acts of communication and accesses their meaning.

We have enough experience with information search engines, for example, and their assumptions and impacts, to intuit the consequences of this technological intermediation on the daily use of language itself. Technological intermediation, in general, generates a digital trail of personal data beyond our control. The incessant technological intermediation of human language and communication, in particular, funneled into a small global oligopoly, creates alarming risks to individual and collective sovereignty.

The undesirable impacts of emerging technologies are mitigated with more and better technology, not less. Dispersing the provision of these services is crucial to counteracting the threat posed by their concentration. The answer, therefore, lies in fostering an alternative innovation ecosystem that allows timely access to the resources necessary for the appropriation and exploitation of Language Technology by the largest possible number of individuals and organizations: private and public, small and large, national and international.

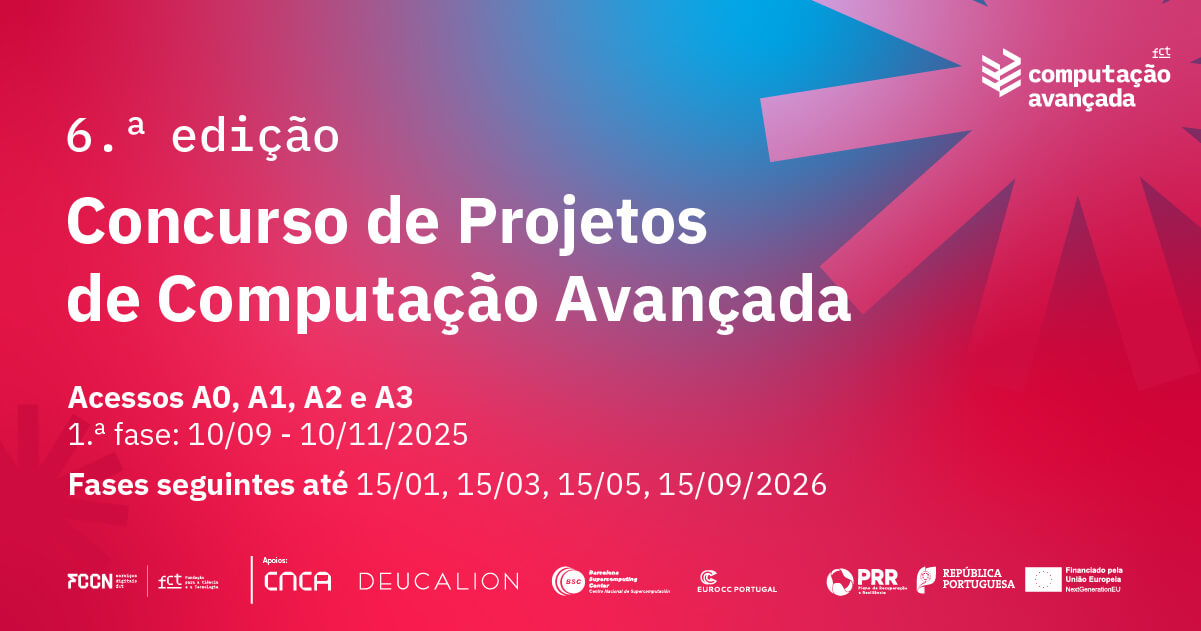

In this regard, the RNCA is already playing a role of the utmost importance, namely through the Advanced Computing Project Competition: Artificial Intelligence in the Cloud.

I coordinate one of the projects funded by the first edition of this competition, in which we seek to contribute to open AI and to the technological preparation of the Portuguese language. One of the results of this project, which I report here, is Albertina PT-*. This is a foundation model developed specifically for the Portuguese language, covering both the European variant spoken in Portugal and the American variant spoken in Brazil.

As far as we know, with its 900 million parameters and its level of performance, it constitutes the current state of the art among large foundation language models of the encoder class for this language that are publicly available as open source, free of charge, and under an unrestricted license. A comprehensive presentation of its characteristics and implementation can be found in the paper accepted for publication in the proceedings of EPIA 2023, the annual conference of the Portuguese Association for Artificial Intelligence.

This is just a first step towards democratizing this technology, which is key to the future, and towards promoting open generative AI, to which RNCA, I am sure, will continue to make an invaluable contribution.

___